Cloud 9 My Uploaded File .xlsx Is Not Recognized

Spring 2012 Edition

Importing Data from Excel Spreadsheets

Dos, don'ts, and updated procedures for ArcGIS 10

By Mike Price, Entrada/San Juan, Inc.


What You Will Need

- ArcGIS 10 for Desktop

- Microsoft Excel 2010/2007 or 2003 or the 2007 Office System Driver

- Sample dataset

This exercise models data from a well-known gold and base metals mining area in northern Nevada located near the town of Battle Mountain.

Many organizations keep valuable data in Microsoft Excel and comma-separated values (CSV) files. Learn a methodology for importing data kept in Excel and CSV files into ArcGIS that has been updated for ArcGIS 10 and Microsoft Office 2007/2010.

Excel spreadsheets have been used since the release of ArcGIS 8 to prepare and import tabular data into a GIS. Previous ArcUser articles described the benefits and limitations of spreadsheets in the version of ArcGIS current at that time. In early 2004, ArcUser editor Monica Pratt wrote "Working with Excel in ArcGIS." In 2007, the author wrote another article on the same topic, "Mapping and Modeling Groundwater Geochemistry."

Since these articles were published, Microsoft has released two new versions, Excel 2007 and Excel 2010. With each release, spreadsheet capabilities have improved and the processes for importing data into ArcGIS have changed. This article updates and refines the rules and procedures previously given for importing Excel 2003 files into ArcGIS 9.x.

Although the sample data is synthetic, it is true to the underlying geology of Battle Mountain, Nevada.

This exercise reexamines the Excel spreadsheet as a data import tool, focusing on ArcGIS 10 and Excel 2007/2010. The tutorial uses spreadsheets to create and enhance geologic data. Field samples include Hydrogeochemical Stream Sediment Reconnaissance (HSSR) points plus custom soil and rock data. In this exercise, we will model a well-known gold and base metals mining area in northern Nevada, located near the town of Battle Mountain. The custom samples are typical of data that might come from the field, assayed by a modern analytic laboratory.

A Word about Microsoft Excel Versions

If you have installed Office 2007, you can read .xls and .xlsx files. If you have Office 2003 or 2010 installed, you can read .xls files, but you will need to install the 2007 Office System Driver to read .xlsx files.

If you do not have Microsoft Excel installed, you must install the 2007 driver before you can use either .xls or .xlsx files. The 2007 Office System Driver can be downloaded from the Microsoft Download Center. Carefully follow the installation instructions before you restart ArcGIS.

Also, if you have previously specified on the File Types tab of the Customize > ArcCatalog Options dialog box that ArcCatalog show you .xls files, you'll need to remove this file type to be able to access Excel files directly.

Before beginning to work the exercise, read the accompanying article, "Best Practices When Using Excel Files with ArcGIS," for valuable tips on working with Excel data.

Getting Started: Examining Files in ArcCatalog

Preview the sample data in ArcCatalog.

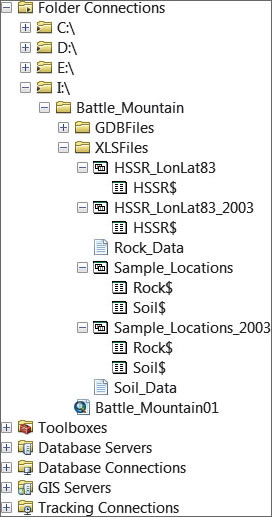

To begin this exercise, download the training data. Unzip the excelmagic.zip data into a project area on your local machine and start ArcCatalog.

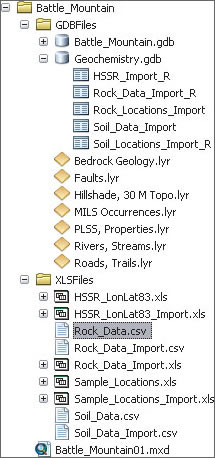

Navigate to the Battle_Mountain folder and locate the XLSFiles folder. When ArcCatalog displays an Excel file, it adds a dollar sign ($) to each worksheet name. Inside this folder, expand all files. Locate Sample_Locations.xlsx and preview Rock$. This Excel 2010 spreadsheet contains two worksheets named Rock$ and Soil$. Both contain sample numbers, universal transverse Mercator (UTM) coordinates, and field information that allow this data to be posted on a map. Next, preview HSSR_LonLat83.xlsx and study its only worksheet, HSSR$.

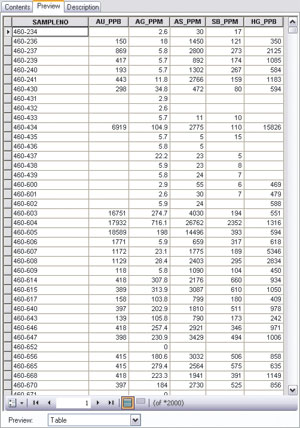

Next, locate and preview two CSV files, Rock_Data and Soil_Data. These files contain companion analytic data for the Rock$ and Soil$ worksheets. The [SAMPLENO] field in both CSV files will support a one-to-one tabular join with the same field in the Soil$ and Rock$ worksheets.
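Before relying on a one-to-one join, it can help to confirm that the key field really matches between the two tables. The following is a minimal sketch in Python, not part of the original exercise; the tiny in-memory tables and the function name `check_one_to_one` are hypothetical stand-ins for a location worksheet and its companion CSV file.

```python
import csv
import io
from collections import Counter


def check_one_to_one(location_rows, data_rows, key="SAMPLENO"):
    """Report key values that would break a one-to-one join."""
    loc_keys = Counter(row[key] for row in location_rows)
    data_keys = Counter(row[key] for row in data_rows)
    all_keys = set(loc_keys) | set(data_keys)
    return {
        # Keys that occur more than once in either table
        "duplicates": sorted(
            k for k in all_keys if loc_keys[k] > 1 or data_keys[k] > 1
        ),
        # Keys present in one table but not the other
        "missing_in_data": sorted(set(loc_keys) - set(data_keys)),
        "missing_in_locations": sorted(set(data_keys) - set(loc_keys)),
    }


# Hypothetical miniature tables (illustrative values only)
locations = list(csv.DictReader(io.StringIO(
    "SAMPLENO,UTM83Z11_E\nS001,500000.00\nS002,500100.00\n")))
analytics = list(csv.DictReader(io.StringIO(
    "SAMPLENO,AU_PPB\nS001,12\nS003,<5\n")))

report = check_one_to_one(locations, analytics)
print(report)
```

An empty report for all three entries suggests the join key is clean; anything else is worth resolving before joining in ArcGIS.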

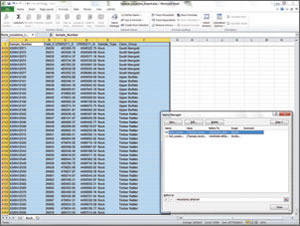

Closely inspect the alignment of data in Soil_Data columns. Notice that [SAMPLENO] and [SB_PPM] are aligned on the left side of the column while [AU_PPB], [AG_PPM], [AS_PPM], and [HG_PPB] are aligned on the right. Scroll down through the table and observe that many fields in the right-aligned columns are empty. In the source CSV file, many of the fields contain nonnumeric strings that do not display properly.

Notice that [SB_PPM], a left-aligned field, contains many fields that begin with a less than (<) character. When a geochemical lab is unable to measure the presence of an element, the analytic posting will include a less than character, followed by the detection limit value. In [SB_PPM], the detection limit for antimony is five parts per million, and many samples contain less than this threshold value.

When ArcGIS reads an Excel worksheet table, it uses the first eight rows to define the field format. If those first eight rows contain mixed data types in a single field, that field will be converted to a string field, and the values contained in that field will be converted to strings. When ArcGIS reads a CSV file, the very first record defines the field type. Consequently, some rather detailed data preparation will be necessary before you can use these files. The next step will be to prepare the spreadsheet and CSV data for import into ArcGIS. Close ArcCatalog.
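The first-eight-rows rule described above can be approximated with a quick pre-check. This sketch is not ArcGIS's actual type-detection code; it only flags columns whose first eight non-empty values mix numeric and nonnumeric text. The function names and sample values are hypothetical.

```python
import csv
import io


def is_number(text):
    """Return True if the text parses as a floating-point number."""
    try:
        float(text)
        return True
    except ValueError:
        return False


def mixed_type_columns(rows, sample_size=8):
    """Names of columns whose leading rows mix numeric and nonnumeric values."""
    sample = rows[:sample_size]
    if not sample:
        return []
    flagged = []
    for name in sample[0].keys():
        values = [row[name] for row in sample if row[name] != ""]
        kinds = set(is_number(value) for value in values)
        if len(kinds) > 1:  # both numeric and nonnumeric values were seen
            flagged.append(name)
    return flagged


# Hypothetical sample records using field names from the exercise
sample_csv = (
    "SAMPLENO,AU_PPB,AG_PPM\n"
    "S001,12,0.7\n"
    "S002,<5,1.2\n"
    "S003,8,0.9\n"
)
rows = list(csv.DictReader(io.StringIO(sample_csv)))
flagged = mixed_type_columns(rows)
print(flagged)
```

Columns reported here are likely candidates for the cleanup described in the following sections.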

After field names have been corrected, create a named range in Excel called Rock_Locations_Import_R.

Preparing Excel Data for Importing

These detailed instructions are specifically for Excel 2007 and Excel 2010. If you want to try this exercise using Excel 2003, open Sample_Locations_2003.xls instead.

- Start Excel 2007 or 2010 and open \Battle_Mountain\XLSFiles\Sample_Locations.xlsx. Open the Soil worksheet and inspect the data. This location table contains 20,096 soil sample points, posted in UTM North American Datum 1983 (NAD83) Zone 11 Meters. Coordinates are posted and displayed using a precision of 0.01 meters. Many samples are coded by Claim Group.

- Save this spreadsheet as a new file so you can retain the original data as an archive. Name the new file Sample_Locations_Import.xls.

- Click the Soil$ worksheet and look at the first row of data. Many text strings in this row contain spaces. Change these spaces to underscores. (Hint: Select only the first row and use Find and Replace.)

- Next, clarify the coordinate system columns. Change Easting to UTM83Z11_E and Northing to UTM83Z11_N.

- Now define a named range. Move to cell A1. Notice that the titles are locked, so tap the F8 key to begin to extend a cell range. Hold down the Ctrl key and tap End to stretch the range (highlighted in cyan) to the lowest rightmost cell. Make sure the header fields are included in the highlighted area.

- In the Excel ribbon, select Formulas, click Name Manager, and click the New button. Name the new range Soil_Locations_Import_R. Click OK and close the Name Manager.

- Press the Ctrl and Home keys to return to the upper left live cell. Click the Name Box drop-down, located just above cell A1, to select and verify your range name. Save the spreadsheet file.

- Switch to the Rock$ worksheet and review this data. Make the same types of modifications to this worksheet to enforce correct field naming conventions. Make sure you have appropriate field names in the first row. (Hint: The tabular structure for the Soil$ and Rock$ worksheets is the same, so you can use the same procedure you used on Soil$.) Create a new range name called Rock_Locations_Import_R. Verify the new range and save the file.

When CSV files are viewed in ArcCatalog, many records have blank fields.

Prepare a Composite Spreadsheet

HSSR_LonLat83.xlsx contains 96 sample sites collected as part of the HSSR back in the 1970s and 1980s. This data is often used as part of a regional reconnaissance program. Prepare the HSSR data for import.

- In Excel, open HSSR_LonLat83.xlsx. Save a copy to work with and name it HSSR_LonLat83_Import.xlsx. Inspect this data and fix any field headers that don't follow the rules. Make sure to check for spaces in field header names.

- The major fix will be to change the percent (%) symbol to the letters PCT. Use Replace by selecting the first row and pressing Ctrl + H to perform that task quickly.

- Create a named range called HSSR_Import_R and make sure it includes those header fields. Save this file.
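The two header fixes used so far (spaces to underscores, and the % symbol to PCT) are simple enough to sketch as a function. This is an illustrative Python sketch only; the exercise performs these edits by hand in Excel, and the function name and sample header strings are hypothetical.

```python
import re


def clean_field_name(name):
    """Apply the two header rules from the exercise to one field name."""
    name = name.strip().replace("%", "PCT")  # percent symbol becomes PCT
    name = re.sub(r"\s+", "_", name)         # runs of whitespace become one underscore
    return name


# Hypothetical header strings for illustration
headers = ["Sample No", "Claim Group", "Fe %", "UTM83Z11_E"]
cleaned = [clean_field_name(h) for h in headers]
print(cleaned)
```

Names that already follow the rules, such as UTM83Z11_E, pass through unchanged.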

Managing CSV Files in a Text Editor

Now to prepare the Rock and Soil analytic data for proper import, a much more difficult task. First, you will use a text editor to prepare Soil_Data.csv.

- Using Windows Explorer (or another file manager), navigate to \Battle_Mountain\XLSFiles and open Soil_Data.csv in WordPad. (Note: If CSV files are opened in Excel by default on your machine, right-click the file, choose Open With, and select WordPad.)

- Immediately save this file as Soil_Data_Import.csv.

- Notice that field names are properly constructed and that much of the analytic data is numeric. However, there are many records that contain < characters before a numeric value.

These records each contain less than the minimum detectable amount of a specific element. Sometimes, a sample contains more than a maximum detectable amount. These samples are usually coded with a greater than (>) symbol (e.g., >10,000 for gold). Fortunately, the over-limit samples in this dataset have already been resolved, so only the less than values need fixing.

Since it is statistically meaningful to recognize that some small amount of each element exists in all samples, it is not appropriate to change all < values to zero. Instead, change them to a smaller absolute value, typically 20 to 50 percent of the detection limit. Take a more conservative approach and use 20 percent. Table 1 lists the current value and smaller absolute value for elements below the minimum detection limit.

| Element | Abbr. | Unit | Detection Limit | Change From | Change To |
|---|---|---|---|---|---|
| Antimony | Sb | PPM | 5.0 ppm | <5 | 1 |
| Arsenic | As | PPM | 5.0 ppm | <5 | 1 |
| Gold | Au | PPB | 5.0 ppb | <5 | 1 |
| Mercury | Hg | PPB | 10.0 ppb | <10 | 2 |
| Silver | Ag | PPM | 0.5 ppm | <0.5 | 0.1 |

Table 1: Elements below detection limit in sample data

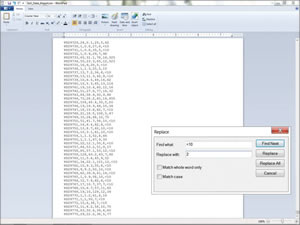

- In WordPad, move to the top of the document and begin by replacing the values for antimony. Select Replace (shortcut: press and hold the Ctrl and H keys) and (based on the values shown in Table 1) set Find to <5 and Replace All to 1. Use Find and Replace All to change all values below the detection limit for arsenic, gold, mercury, and silver to the values shown in Table 1. When finished with the last element, perform one more Find for both < and > symbols to confirm that all the above and below limit values were found. You will see that one sample below the minimum level for silver (Ag) was listed as <0.2 rather than <0.5. Change it to 0.1. Save and close the file.

When the same file is viewed in WordPad, blank fields contain values preceded by a < or > symbol. These signal values below the detection limits and will be replaced using values in Table 1.
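The same substitution can be sketched in Python as a per-field lookup rather than a global find and replace. This is only an illustration under stated assumptions: the replacement values come from Table 1 and the field names from the Soil_Data preview, but the function names and sample record are hypothetical. Because the lookup is keyed by field, the odd <0.2 silver posting also resolves to 0.1, as the exercise does by hand.

```python
# Replacement values from Table 1, keyed by field name
BELOW_LIMIT = {
    "SB_PPM": "1",
    "AS_PPM": "1",
    "AU_PPB": "1",
    "HG_PPB": "2",
    "AG_PPM": "0.1",
}


def resolve_below_limit(row):
    """Return a copy of the record with '<' postings replaced per Table 1."""
    fixed = dict(row)
    for field, replacement in BELOW_LIMIT.items():
        value = fixed.get(field) or ""
        if value.strip().startswith("<"):
            fixed[field] = replacement
    return fixed


def unresolved(row):
    """List fields that still hold a < or > posting after cleanup."""
    return sorted(
        field for field, value in row.items()
        if (value or "").strip().startswith(("<", ">"))
    )


# Hypothetical record for illustration
record = {"SAMPLENO": "S001", "AU_PPB": "<5", "AG_PPM": "<0.2",
          "AS_PPM": "14", "HG_PPB": "<10", "SB_PPM": "7"}
cleaned = resolve_below_limit(record)
print(cleaned, unresolved(cleaned))
```

The `unresolved` check plays the role of the final Find for < and > symbols in the step above.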

Managing CSV Files in Excel

Now, try a similar approach with Rock_Data.csv, using Excel to replace undesirable values. This approach is much more powerful, but also dangerous.

The danger with using Excel to edit and format analytic data lies in how it handles leading zeros in alphanumeric strings when all other characters are numeric. Excel tends to convert leading zero strings to numeric values, which forever changes the data. This can be especially dangerous when working with datasets such as tax parcels and lab samples. If the file is then saved from Excel back into a CSV file, the leading zeros are gone for good.
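The leading-zero problem is easy to reproduce outside Excel. In this small Python sketch (the PARCEL_ID field and its value are hypothetical), the identifier survives while it is kept as text and loses its zeros as soon as it is treated as a number and written back out.

```python
import csv
import io

# Hypothetical one-record CSV with a zero-padded identifier
raw = "PARCEL_ID,AU_PPB\n00123,12\n"
row = next(csv.DictReader(io.StringIO(raw)))

as_text = row["PARCEL_ID"]              # kept as a string, zeros intact
as_number = str(int(row["PARCEL_ID"]))  # numeric round trip drops the zeros

print(as_text, as_number)
```

This is the reason the later formatting step sets the sample number column to Text before saving.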

- In Windows Explorer, find Rock_Data.csv and open it in Excel. Immediately save the file as Rock_Data_Import.xls so you have the original CSV file and this copy in Excel.

- Inspect the file and look for improper field names and inappropriate data formats. Note that numeric data aligns on the right side of a cell, while alphanumeric data aligns on the left. Note that alphanumeric data that did not show up in most fields when previewed in ArcCatalog is now visible in Excel.

- Repeat the same Find and Replace All steps performed on Soil_Data_Import.csv in WordPad using the replacement values in Table 1. Note that the numeric values align on the right.

- Search for the < and > characters to confirm that all the above and below limit values have been found. Another sample below the minimum level for silver (Ag) was listed as <0.2 rather than <0.5. Edit the cell manually to change the value to 0.1. Save the file.

- Next, manually format columns to reinforce numeric data formats. Select Column A by right-clicking its header, select Format Cells, and choose Text. Right-click columns B, D, E, and F and format as Number with no (0) decimal places. Right-click Column C and format as Number with one decimal place.

- Save the file as a CSV file, then reopen it in Excel and save it as an XLS file.

- In the last step in preparing Rock_Data_Import.xls, create a named range containing all the cells and name it Rock_Data_Import_R. Now the data can be imported into an ArcGIS geodatabase. Save the file and close Excel.

Once all tables have been carefully prepared, they are imported into a new file geodatabase called Geochemistry.

Building the Geodatabase (Finally)

As the last step in this exercise, you will create a geochemistry geodatabase and import the Excel named ranges and CSV files.

- Open ArcCatalog and navigate to \Battle_Mountain\GDBFiles. Preview the Battle_Mountain.mxd file and review the layer files and the geodatabase layers in the Battle_Mountain file geodatabase.

- Right-click the Battle_Mountain\GDBFiles folder, select New > File Geodatabase, and name it Geochemistry.

- First, test a single table import of an Excel named range by right-clicking the new Geochemistry geodatabase and selecting Import > Table (single).

- In the Table to Table wizard, set Input Rows by browsing to \Battle_Mountain\XLSFiles and opening Sample_Locations_Import.xls. In the Input Rows dialog box, select Rock_Locations_Import_R, the named range created previously. Name the output table Rock_Locations_Import, accept all other defaults, and click OK. The rock sample locations are added as a geodatabase table.

- Open the table and verify that the import was successful. Pay special attention to field names and formats to make sure they were imported correctly. If not, check that you imported the correct file and that the named range included the field names. Make any corrections and reimport the file.

- Continue populating the geodatabase by adding the rest of the tables. Right-click the Geochemistry geodatabase and select Import > Table (multiple).

- In the Table to Geodatabase (multiple) wizard, load all remaining tables, including three tables created from Excel named ranges located in \Battle_Mountain\XLSFiles:

\Sample_Locations_Import.xls\Soil_Locations_Import_R

\HSSR_LonLat83_Import.xlsx\HSSR_Import_R

\Rock_Data_Import.xls\Rock_Data_Import_R

and one CSV file:

\Soil_Data_Import.csv

- Click OK and watch as the four files are imported.

Because you carefully defined these import datasets, the ArcGIS data geoprocessing function readily uses the assigned names.
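For readers who prefer scripting, the import steps above might be sketched with the arcpy geoprocessing tools Create File GDB, Table To Table, and Table To Geodatabase. This is a hedged outline rather than the exercise's method: it assumes ArcGIS 10 for Desktop is installed, the file names follow those saved in the preparation steps, the folder paths are written exactly as in the exercise and need adjusting to your drive, and the helper names `build_inputs` and `import_tables` are hypothetical.

```python
import ntpath  # Windows-style path joins, regardless of where the script is read

XLS_FOLDER = r"\Battle_Mountain\XLSFiles"  # as written in the exercise; adjust as needed
GDB_FOLDER = r"\Battle_Mountain\GDBFiles"


def build_inputs(xls_folder):
    """Full paths of the three named ranges and one CSV file to load together."""
    relative = [
        ntpath.join("Sample_Locations_Import.xls", "Soil_Locations_Import_R"),
        ntpath.join("HSSR_LonLat83_Import.xlsx", "HSSR_Import_R"),
        ntpath.join("Rock_Data_Import.xls", "Rock_Data_Import_R"),
        "Soil_Data_Import.csv",
    ]
    return [ntpath.join(xls_folder, item) for item in relative]


def import_tables(xls_folder, gdb_folder, gdb_name="Geochemistry.gdb"):
    """Create the geodatabase and import the tables (requires arcpy)."""
    import arcpy  # only available with an ArcGIS installation

    arcpy.CreateFileGDB_management(gdb_folder, gdb_name)
    gdb = ntpath.join(gdb_folder, gdb_name)

    # Single-table test import of the rock locations named range
    rock_range = ntpath.join(
        xls_folder, "Sample_Locations_Import.xls", "Rock_Locations_Import_R")
    arcpy.TableToTable_conversion(rock_range, gdb, "Rock_Locations_Import")

    # Remaining tables in one call
    arcpy.TableToGeodatabase_conversion(build_inputs(xls_folder), gdb)


inputs = build_inputs(XLS_FOLDER)
print(inputs)
```

Calling `import_tables(XLS_FOLDER, GDB_FOLDER)` on a machine with ArcGIS would run the actual import; the table checks described in the next paragraph still apply.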

Finally, open each table in ArcCatalog and verify field names, formats, and record counts. You successfully outmaneuvered those tricky % characters. As a last step, remove the _Import from each geodatabase table name and take a break.

Conclusion

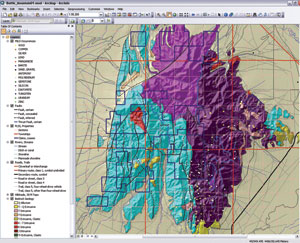

If you preview the Battle Mountain geologic map or open the Battle Mountain MXD, you will see the bedrock geology, geologic structure, and mineral occurrences in the study area. Wouldn't it be interesting to place all these rock, soil, and stream sediment samples in this model and go prospecting? This model is designed to do just that. The geochemical data can be used to analyze favorable ratios between multiple elements, define spatial relationships between rock units and faulting, and compare your data to current mines and past producers.

Acknowledgments

The data used in this exercise was originally developed as part of an ArcView GIS 3 mining training program. While the sample data is synthetic, it is true to the underlying geology. While landownership is imaginary, it reflects exploration trends around Battle Mountain, Nevada, in the early 1990s. Bedrock geology was derived from the Nevada Bureau of Mines and Geology County mapping series. HSSR data was developed through the US Department of Energy National Uranium Resource Evaluation (NURE) program. All data has been transformed from UTM North American Datum 1927 (NAD27) into the current NAD83 datum.

Source: https://www.esri.com/news/arcuser/0312/importing-data-from-excel-spreadsheets.html
